Services

Our services are designed to help life science companies translate genomic data into medicine.

We work with a variety of data types, including but not limited to:

Genomic

Spatial Transcriptomics

Epigenetic

Single Cell RNA-seq

RNA-seq/transcriptomic

16S & 18S

Proteomic

Metagenomic

Clinical

Diagnostic

GxP compliant

FHIR standard

21 CFR Part 11 compliant

Flexible Staffing Solutions

Tap into our network of biologists, computational scientists, software engineers, bioinformaticians, business strategists, and executive leaders. Whether you’re a startup or a global corporation, we provide flexible staffing solutions tailored to your specific needs.

Here’s how we can help:

- Rely on our team’s bench science experience to ensure a faster, more efficient match

- Find the right talent instantly with our deep talent pool

- Avoid high management overhead by letting us handle recruitment, onboarding, and management

- Customize your support with engagement lengths and weekly commitment levels to perfectly match your project requirements, whether that’s for weeks, months, or years

- Predict costs with straightforward pricing models

Don’t let staffing challenges hinder your progress. Partner with us to access the talent you need, when you need it. Contact us today to discuss your specific requirements and discover how our flexible staffing solutions can help you achieve your goals.

Bioinformatics & Computational Biology

We help you realize the full value of your biological data so you can answer your research questions with speed and certainty.

You need an expert to understand and analyze your biological data, and we’re uniquely qualified to perform your bioinformatics analysis. We’ve built a team of bioinformaticians with experience at the bench whose core specialty is understanding and analyzing biological data. We start with the end in mind, unearthing biological insights and discoveries hidden in the data.

Here’s how we can help:

- Build a custom script guided by your computational and bioinformatic specifications

- Advise on experimental design, library preparation, and sequencing platforms to help you generate high-quality sequencing data

- Pre-process data to ensure validity by removing errors, inconsistencies, and/or outliers

- Analyze data to answer specific computational biology and bioinformatics research questions

- Summarize the most compelling findings to help you determine the best next steps

- Deliver data in your desired format (e.g., tables, visualizations, or GUIs)

- Educate the wider R&D team to help them understand how we generated the results, what they mean, and what to do next

We’ll make post-analysis recommendations to help you unlock deeper insights beyond traditional bioinformatics analysis.

Deeper Biological Understanding:

- Annotation: Comprehensive annotation of single cell datasets to identify cellular heterogeneity in the sample

- Differential Gene Expression: Identify genes with altered expression levels between different cell types and/or conditions

- Pathway Analysis: Delineate biological pathways using a list of dysregulated genes in order to understand the underlying mechanisms
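As a rough illustration of the idea behind differential gene expression, the sketch below computes a log2 fold change between two conditions and flags genes whose expression at least doubles or halves. It is a deliberately simplified example, not a description of our production workflow: the gene names, counts, pseudocount, and threshold are all hypothetical, and a real analysis would add normalization and statistical testing with a dedicated tool such as DESeq2 or edgeR.

```python
import math
from statistics import mean


def log2_fold_change(control, treated, pseudocount=1.0):
    """Log2 ratio of mean expression (treated vs. control).

    The pseudocount (an illustrative choice) avoids division by zero
    for genes with no reads in one condition.
    """
    return math.log2((mean(treated) + pseudocount) / (mean(control) + pseudocount))


# Hypothetical expression values: gene -> (control replicates, treated replicates)
expression = {
    "geneX": ([6, 7, 8], [30, 31, 32]),
    "geneY": ([10, 12, 11], [11, 10, 12]),
}

# Fold change per gene
fold_changes = {
    gene: log2_fold_change(control, treated)
    for gene, (control, treated) in expression.items()
}

# Flag genes whose expression at least doubles or halves (|log2FC| >= 1)
changed = [gene for gene, lfc in fold_changes.items() if abs(lfc) >= 1.0]
```

A fold change alone says nothing about statistical significance, which is why replicate-aware testing is part of any real analysis.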

Predictive Modeling & Actionable Insights:

- Trajectory Analysis: Track cellular changes over time to understand disease progression and potential intervention points

- Network Analysis: Construct network maps to visualize interactions between genes and/or sequences and identify key regulators

- AI-Powered Models: Develop deep learning prediction models of disease risk, treatment response, and patient outcomes

- Multi-omics Integration: Combine data from multiple sources (genomics, proteomics, metabolomics) for a holistic view of disease complexity

Our clients decide what makes a project complete: we either conduct the analysis and present our findings, or we do that and also build a pipeline using a workflow management system.

In essence, a bioinformatics pipeline is a series of interconnected computational steps designed to process and analyze biological data. Think of it like an assembly line in a factory, where each station performs a specific task on the product as it moves along. In our case, the ‘product’ is your genomic data, and each step in the pipeline performs a crucial analysis or transformation.
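To make the assembly-line analogy concrete, here is a minimal Python sketch of a pipeline as an ordered list of steps, each taking the output of the one before it. The step names and the toy reads are hypothetical and chosen only for illustration; a production pipeline would typically be built with a workflow management system rather than plain functions.

```python
def uppercase(reads):
    """Station 1: standardize the case of every read."""
    return [read.upper() for read in reads]


def drop_ambiguous(reads):
    """Station 2: discard reads containing ambiguous bases (N)."""
    return [read for read in reads if "N" not in read]


def drop_short(reads, min_len=4):
    """Station 3: discard reads shorter than min_len (illustrative cutoff)."""
    return [read for read in reads if len(read) >= min_len]


def run_pipeline(reads, steps):
    """Pass the data through each step in order, like an assembly line."""
    for step in steps:
        reads = step(reads)
    return reads


# The order of the steps is part of the pipeline definition.
PIPELINE = [uppercase, drop_ambiguous, drop_short]

raw_reads = ["acgtac", "ACNNGT", "ac", "GGCATT"]  # toy input
clean_reads = run_pipeline(raw_reads, PIPELINE)
print(clean_reads)
```

Because the steps and their order are written down in one place, the same analysis can be rerun on new data without manual intervention.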

After we analyze your genomic data, providing you with a pipeline empowers you to:

- Independently verify and reproduce our findings

- Efficiently analyze new data as it becomes available

- Adapt the analysis as your research evolves

- Maintain organized and accessible records of your data and results

Ultimately, a pipeline provides you with the tools and autonomy to continue exploring your genomic data long after our initial analysis is complete. It’s an investment in the future of your research.

By working with Bridge Informatics, you benefit from our extensive experience in delivering high-quality solutions to the complex and challenging aspects of bioinformatics, so you can focus on your core research goals.

Data Mining

Whether you’re trying to obtain, extract, or identify patterns in existing data, we have the experience and expertise to quickly locate, validate, and analyze the data. We help clients efficiently source and analyze publicly available DNA, RNA, single-cell, epigenetic, and protein data needed to answer their most pressing research questions.

Here’s how we can help:

- Identify the best channels to source credible and relevant public and private data

- Source the right type and quantity of data in line with your requirements

- Execute quality control measures to ensure the format is compatible with your existing data

- Pre-process the data in preparation for analysis (e.g., curation, normalization or scaling)

- Store the mined, preprocessed, and analyzed data in a secure yet accessible cloud environment (like AWS S3)

- Analyze the data to answer your research questions

- Extract insights to inform decision making
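As an example of the pre-processing step mentioned above, the sketch below normalizes raw read counts to counts per million (CPM) and then applies a log2 transform. CPM is only one common normalization choice and is used here as an assumption; the gene names, counts, and pseudocount are hypothetical, and the right method depends on the data type and the research question.

```python
import math


def counts_per_million(counts):
    """Scale raw counts so that each sample sums to one million."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample has no reads")
    return {gene: count / total * 1_000_000 for gene, count in counts.items()}


def log2_transform(values, pseudocount=1.0):
    """Compress the dynamic range; the pseudocount keeps zeros defined."""
    return {gene: math.log2(value + pseudocount) for gene, value in values.items()}


# Hypothetical raw read counts for one sample
sample = {"geneA": 50, "geneB": 150, "geneC": 800}

cpm = counts_per_million(sample)
log_cpm = log2_transform(cpm)
```

Normalizing in this way makes samples sequenced to different depths comparable before any downstream analysis.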

Cloud Architecture

Fueling efficiency through data centralization. Streamline how you collect, store, share, reproduce, and search your data.

Your data is your most valuable asset. We prioritize the latest innovations in digital storage technology to ensure our pharmaceutical and biotech clients can securely store and manage their data.

- Build a storage infrastructure on an optimal cloud service provider (Amazon Web Services (AWS), Azure, or GCP) in line with your needs.

- Store your data in an unstructured or structured format.

- Regulate and maintain remote access to confidential data.

- Enable secure access to your confidential data with virtual private networks (VPN) designed to meet your IT standards

- Facilitate fast and secure transfer of genomic and life science data with internal teams and external collaborators

- Automate the upload of data outputs from any instrument (such as an NGS instrument) to a cloud location.

- Create scalable custom data processing and analytics pipelines.

- Tailor virtual machines with the hardware, software, and tools needed for specific projects

- Deploy computationally intensive virtual machines for data processing and analysis

- Rely on us as your trusted partner to manage and maintain your cloud service provider account(s) for cost and resource efficiency

These examples are just the tip of the iceberg

We work with you to build out a custom cloud infrastructure that serves all your specific needs! Contact us for a free consultation to outline your cloud-based or hybrid data infrastructure.

Underdeveloped data management strategies stifle innovation and increase therapy-to-market lead times. We’re helping organizations focus their resources on data-driven innovation. Schedule a complimentary call with our team to learn how we can help store and manage your data safely so you can get back to the bigger picture.

Cloud Maintenance

Ensuring seamless and secure cloud environments.

We leverage cutting-edge advancements in cloud technology to safeguard and manage your critical data. We’ll help evaluate the effectiveness of your system architecture in real-time by benchmarking it against industry best practices to save you time and money.

We’ll provide secure and reliable management solutions tailored to your needs:

- Govern and maintain meticulous access protocols over confidential data to ensure a secure and compliant environment

- Automate backup to ensure data reliability and availability

- Minimize losses from failures with a robust disaster recovery plan

- Maintain system reliability and performance utilizing pre-emptive measures

- Scale architecture in line with workload demands

- Optimize costs through efficient resource allocation and monitoring to avoid unnecessary expenses

- Optimize operational performance through incident response and problem resolution

- Grant and regulate user access to crucial environment resources and infrastructure

- Monitor anomalies, behavioral intrusions, and traffic flow to ensure proactive identification and response to potential threats

- Administer compliance assessment and implement security per industry standards and evolving threats

Experience the transformative capabilities of streamlined cloud maintenance with our comprehensive solutions, allowing you to concentrate on your core business while we expertly manage, maintain, and optimize your data.

Software & Database Engineering

Helping R&D teams to discover and interpret data patterns

Our full-stack engineers have deep knowledge of both bench science and software development and can help you design and execute the best data analysis strategy for your project by leveraging the power of computational biology. Whether you need a full engineering team or a single bioinformatics expert, we have you covered by offering flexible options that fit your scope. Depending on your needs we can provide specialized services in software development, pipeline development, data analysis and visualization, and project management.

Here’s how we can help:

- Build a roadmap based on the needs of the users as guided by biologists and bioinformaticians

- Develop the application in a safe staging environment

- Deploy and host the application to the designated cloud environment that best suits your needs (AWS, Azure, GCP)

- Secure the application by applying security patches and regular updates

- Monitor and audit the activity and performance of your application and its users

- Maintain the application by correcting issues or bugs to boost performance

Schedule a complimentary call with our team to learn how we can help you understand and analyze biological data.

Publication Services

At Bridge Informatics, we believe your groundbreaking research deserves a compelling voice. We’re not just about producing polished manuscripts – our data scientists (who are experienced bench researchers) act as your dedicated partners in crafting impactful publications that resonate with the right audience and advance your scientific journey.

Here’s how we help with every step of the publication process:

- Brainstorm to collaboratively define your publication goals, identify the most suitable journals and platforms, and develop a personalized roadmap for success

- Discover the hidden narrative in your data, transforming your research into a compelling story

- Write manuscript sections (such as methods, results, and discussion) to accurately interpret data

- Revise manuscripts and proofs as per reviewer and editorial requests

We don’t just produce content – we craft impactful stories that elevate your research, accelerate your journey to market, and leave a lasting mark on the scientific community.

Contact us today for a free consultation and unlock the full potential of your scientific voice.

Happy Customers

Hear what our incredible customers have to say

- Setup and management of virtual machines to host third-party imaging software with specific hardware and software requirements

- Assisting vendors with the setup of third-party compute clusters

- Cloud resource auditing

- Analysis to support publications

The BI team has been responsive, flexible, and well-organized, and this has enabled our small internal team to focus our efforts on supporting our drug development programs.”

Frequently Asked Questions

When should you use bioinformatics?

Bioinformatics has a huge number of applications. It can be used to analyze all kinds of large biological datasets that would be practically impossible to handle in Excel or with other low-throughput approaches.

What is cloud computing?

Cloud computing is a broad term encompassing data management, data storage, software, and other services and resources hosted on a digital infrastructure platform using the internet.

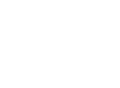

What is an analytical pipeline?

An analytical pipeline is a series of bioinformatic or analytical tasks, in the form of scripts or code, that are run in a predetermined sequence to perform an analysis. Analytical pipelines are rigorously tested to ensure reproducible and reliable results.

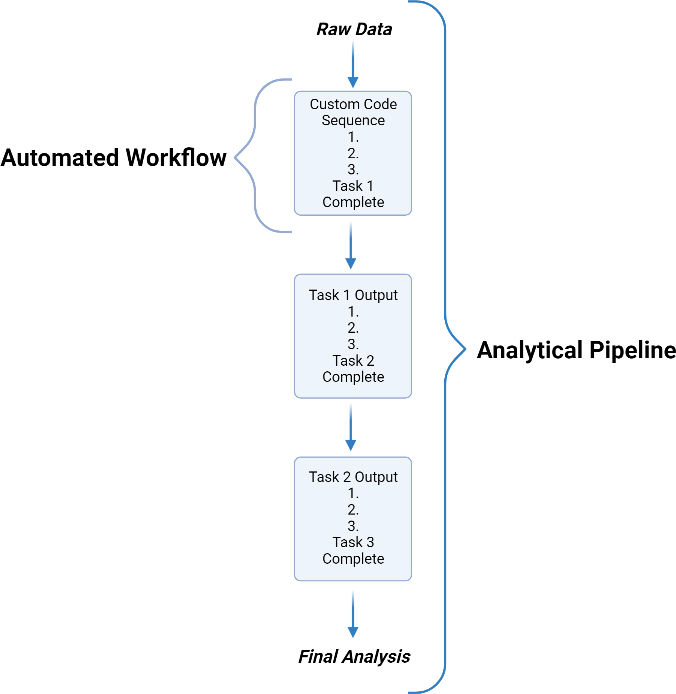

What is bioinformatics as a service (BaaS)?

BaaS involves bioinformaticians, software engineers, and other data scientists, many with years of wet lab experience, working with biologists to create the computational tools needed to address a given biological problem. Examples of BaaS are the analysis of single-cell and bulk next-generation sequencing (NGS) data, literature-driven data mining, and custom bioinformatics training for clients. BaaS providers vary in how they execute these tasks. At Bridge Informatics, we provide bioinformatics services in three main areas. The first is bioinformatic analysis, where we use bioinformatics to identify genomic factors that lead to disease, including biomarkers of disease, drug response, and more. The second is analytical pipeline development (see “What is an analytical pipeline?”). The third is custom software engineering (see “What is custom-tailored software?”).

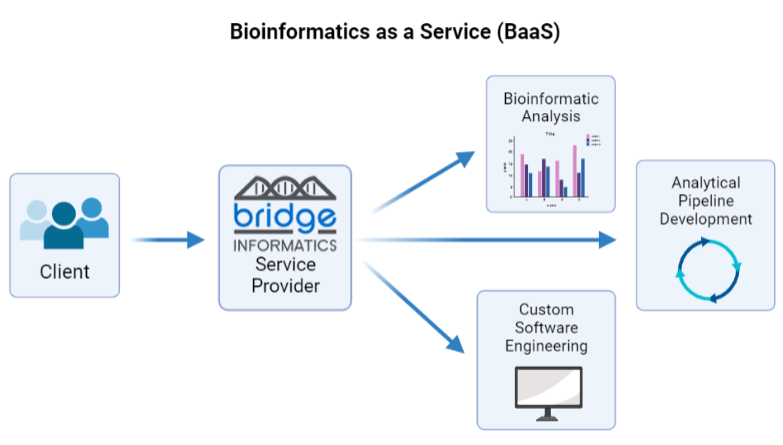

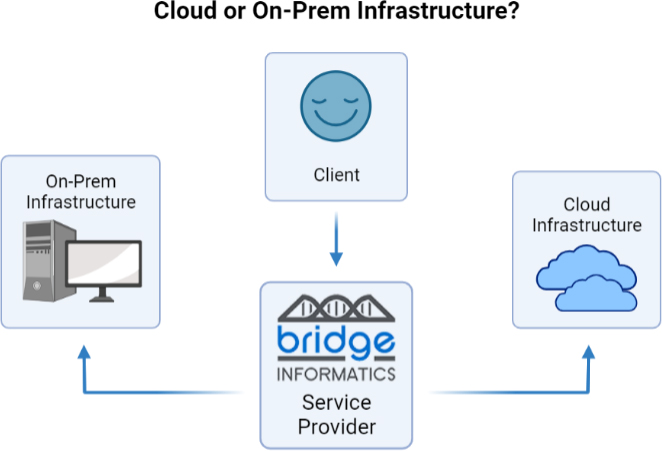

What is infrastructure as a service (IaaS)?

Infrastructure as a service (IaaS) is the creation of on-premise or cloud-based data storage and infrastructure (aka data organization, access, optimization, and integration) custom-tailored to a client’s needs. Service providers like Bridge Informatics have specialists that can create a custom infrastructure for optimal data storage and protection. A quality cloud or hybrid data infrastructure allows for more efficient data collection, storage, sharing, reproducibility, and overall collaboration. IaaS is an essential service for modern life science companies. If you’re curious, book a free discovery call to discuss how we can create custom tools for your data analysis needs.

What are the advantages of bioinformatic services?

In the era of NGS and omics data, a collaboration between bench biologists and computer scientists is essential to uncover new findings and hidden insights. Outsourcing these bioinformatic tasks to Bridge Informatics has many advantages:

- Working with computational scientists who actually “speak biology” and will bridge the gap between the dry and wet lab.

- Saving time for bench researchers and for in-house data scientists.

- Improving the quality and reproducibility of results.

- Drawing on the expertise of BaaS providers to pull out the most significant insights from your data.

What does it mean to build a pipeline?

A bioinformatic or analytical pipeline is a set of analytical steps executed in a predefined sequence to simplify and analyze large and complex biological datasets. Building a pipeline is the process of developing algorithms and testing the individual components to determine the optimal order of execution to produce robust, understandable, and reproducible results.

What is the difference between a programmer and a software developer?

A programmer typically focuses on writing and testing code to an existing specification. A software developer works with the client to develop the project idea, designs the software, and codes the programs involved in the software. The software developer is also involved in the deployment, application, and maintenance of the software.

What is custom-tailored software?

Custom-tailored software is software that is built specifically for a client’s needs and tasks. There are many advantages to having custom-tailored software built, including increased efficiency, scalability of tasks, and flexibility in what the software can do specific to your needs.

What are the steps of having custom-tailored software built?

- Step 1: Work with full-stack software engineers to outline the project goals.

- Step 2: Application development, where the software developers create the programs, pipelines, and applications that will comprise the custom software.

- Step 3: Application deployment, where the finished software is put into use by the client and carefully maintained by the software developer.

What are the steps of having custom-tailored software deployed?

- Step 1: Testing the software at scale and in real-world conditions.

- Step 2: Monitoring the software’s performance.

- Step 3: Maintaining the software, including making any adjustments or additions over time.

How do you maintain applications after deployment?

Maintenance of custom software is one of the most valuable services gained when outsourcing custom-tailored software development, after the software development itself. The team responsible for designing and deploying the software will monitor its performance, ensure it is meeting your needs, and fix any problems that arise. Having a dedicated team for monitoring your new software allows you to focus on your projects and goals while the technical details are handled by our experts.

What is cloud infrastructure versus on-premise infrastructure?

With cloud infrastructure, software and applications are hosted offsite in the ‘cloud’, that is, virtually, on a third-party server. This is in contrast to traditional on-premise infrastructure, where applications and software are hosted on the client’s or service provider’s own servers and infrastructure.

What are the different cloud platforms?

There is a huge variety of cloud platforms available, but here at Bridge Informatics, we primarily use:

- AWS: Amazon Web Services, one of the world’s most commonly used cloud platforms.

- Azure: Microsoft Azure, Microsoft’s public cloud computing platform.

- GCP: Google Cloud Platform, Google’s suite of cloud computing services that uses the same infrastructure that Google uses internally.

What are the benefits of custom cloud solutions?

- Scalability: Custom cloud computing solutions are a great way to scale up from your existing on-premise infrastructure while saving the time and money associated with setting up more physical infrastructure.

- Flexibility: Custom cloud solutions are extremely flexible, and can be scaled up or down and adjusted to a client’s needs as they change over time.

- Security: Data protection is critically important, and there are excellent security measures in place for cloud computing to protect your data.

- Centralization: A huge appeal of custom cloud computing solutions is that the data, software, and other critical services you store in the cloud can be accessed remotely, quickly and easily, from a single, centralized location.

What are Automated Workflows?

Automated workflows are essentially the individual tasks within an analytical pipeline that perform all of the necessary steps for one part of the analytical pipeline. Automated workflows improve the consistency and reproducibility of data analysis by allowing the same type of data to be analyzed identically every time.
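The sketch below illustrates the reproducibility point: a workflow whose parameters are fixed and recorded gives the same result, and the same fingerprint, every time it runs on the same input. The parameter names, sample records, and the use of a SHA-256 fingerprint are assumptions made for this example and are not taken from any specific Bridge Informatics workflow.

```python
import hashlib
import json

# Fixed, recorded parameters (hypothetical values for illustration)
PARAMS = {"min_quality": 20, "round_to": 2}


def run_workflow(samples, params):
    """Filter samples by quality, summarize them, and fingerprint the result."""
    kept = [s for s in samples if s["quality"] >= params["min_quality"]]
    mean_value = round(sum(s["value"] for s in kept) / len(kept), params["round_to"])
    result = {"n_kept": len(kept), "mean_value": mean_value, "params": params}
    # Serialize with sorted keys so identical results give identical hashes
    payload = json.dumps(result, sort_keys=True).encode("utf-8")
    return result, hashlib.sha256(payload).hexdigest()


samples = [
    {"id": "s1", "quality": 30, "value": 1.5},
    {"id": "s2", "quality": 10, "value": 9.0},  # fails the quality filter
    {"id": "s3", "quality": 25, "value": 2.5},
]

first_result, first_fingerprint = run_workflow(samples, PARAMS)
second_result, second_fingerprint = run_workflow(samples, PARAMS)
```

Comparing the two fingerprints is a quick way to confirm that a rerun produced exactly the same output.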

What are the benefits of automated workflows?

- Reproducibility and Reliability: Automated workflows help standardize your outputs after analyzing your raw data to improve the reproducibility of your results.

- Saving time: Once an automated workflow is developed and tested, the efficiency of that step in your analysis process is greatly increased.

- Scalability: Increased efficiency means you can scale up your project and data analysis, allowing for better quality results, statistics, and more.

Why do you need automated workflow or pipeline development?

Automated workflow and custom pipeline development is a highly specialized task, which is why you need a service provider like Bridge Informatics to undertake it for you. Having a service provider create your automated workflows and pipelines saves you time while ensuring your pipeline is of the highest quality.

What are the differences between Custom Cloud and Local Pipelines?

Bridge Informatics’ pipelines are second to none and can be run either on-premise on our servers or on cloud-based platforms. If you don’t have a cloud computing infrastructure, we can create a custom cloud set-up to meet your needs.